By Amnesty International

Meta must reform its business practices to ensure Facebook’s algorithms do not amplify hatred and fuel ethnic conflict, Amnesty International said today in the wake of a landmark legal action against Meta submitted in Kenya’s High Court.

The legal action claims that Meta promoted speech that led to ethnic violence and killings in Ethiopia by using an algorithm that prioritizes and recommends hateful and violent content on Facebook. The petitioners seek to stop Facebook's algorithms from recommending such content to users and to compel Meta to create a KES 200 billion ($1.6 billion USD) victims' fund. Amnesty International joins six other human rights and legal organizations as interested parties in the case.

“The spread of dangerous content on Facebook lies at the heart of Meta’s pursuit of profit, as its systems are designed to keep people engaged. This legal action is a significant step in holding Meta to account for its harmful business model,” said Flavia Mwangovya, Amnesty International’s Deputy Regional Director of East Africa, Horn, and Great Lakes Region.

One of Amnesty’s staff members in the region was targeted as a result of posts on the social media platform.

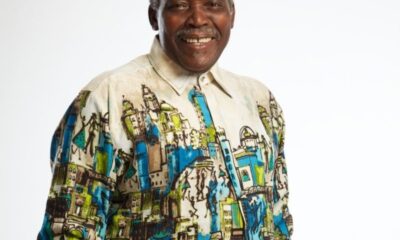

“In Ethiopia, people rely on social media for news and information. Because of the hate and disinformation on Facebook, human rights defenders have also become targets of threats and vitriol. I saw first-hand how the dynamics on Facebook harmed my own human rights work and hope this case will redress the imbalance,” said Fisseha Tekle, legal advisor at Amnesty International.

Fisseha Tekle is one of the petitioners bringing the case, after being subjected to a stream of hateful posts on Facebook for his work exposing human rights violations in Ethiopia. An Ethiopian national, he now lives in Kenya, fears for his life and dares not return to Ethiopia to see his family because of the vitriol directed at him on Facebook.

Fatal failings

The legal action is also being brought by Abraham Meareg, the son of Meareg Amare, a university professor at Bahir Dar University in northern Ethiopia, who was hunted down and killed in November 2021, weeks after posts inciting hatred and violence against him spread on Facebook. The case claims that Facebook removed the hateful posts only eight days after Professor Meareg's killing, more than three weeks after his family had first alerted the company.

The Court has been informed that Abraham Meareg fears for his safety and is seeking asylum in the United States. His mother, who fled to Addis Ababa, is severely traumatized and screams every night in her sleep after witnessing her husband's killing. The family's home in Bahir Dar was seized by regional police.

The harmful posts targeting Meareg Amare and Fisseha Tekle were not isolated cases. The legal action alleges Facebook is awash with hateful, inciteful and dangerous posts in the context of the Ethiopia conflict.

Meta uses engagement-based algorithmic systems to power Facebook's news feed, ranking, recommendations and groups features, shaping what is seen on the platform. Meta profits when Facebook users stay on the platform as long as possible, because the longer they stay, the more targeted advertising it can sell.

The display of inflammatory content – including that which advocates hatred, constituting incitement to violence, hostility and discrimination – is an effective way of keeping people on the platform longer. As such, the promotion and amplification of this type of content is key to the surveillance-based business model of Facebook.

Internal studies dating back to 2012 indicated that Meta knew its algorithms could result in serious real-world harms. In 2016, Meta’s own research clearly acknowledged that “our recommendation systems grow the problem” of extremism.

In September 2022, Amnesty International documented how Meta's algorithms proactively amplified and promoted content that incited violence, hatred and discrimination against the Rohingya in Myanmar, substantially increasing the risk of an outbreak of mass violence.

“From Ethiopia to Myanmar, Meta knew or should have known that its algorithmic systems were fuelling the spread of harmful content leading to serious real-world harms,” said Flavia Mwangovya.

“Meta has shown itself incapable of acting to stem this tsunami of hate. Governments need to step up and enforce effective legislation to rein in the surveillance-based business models of tech companies.”

Deadly double standards

The legal action also claims that there is a disparity between Meta's approach to crisis situations in Africa and its approach elsewhere in the world, particularly North America. The company has the capability to make special adjustments to its algorithms to quickly remove inflammatory content during a crisis. According to the petitioners, although such adjustments have been deployed elsewhere in the world, none were made during the conflict in Ethiopia, allowing harmful content to continue to proliferate.

Internal Meta documents disclosed by whistle-blower Frances Haugen, known as the Facebook Papers, showed that the US $300 billion company also did not have sufficient content moderators who speak local languages. A report by Meta’s Oversight Board also raised concerns that Meta had not invested sufficient resources in moderating content in languages other than English.

“Meta has failed to invest adequately in content moderation in the Global South, meaning that the spread of hate, violence and discrimination disproportionately affects the most marginalized and oppressed communities across the world, and particularly in the Global South.”